Next LoCoRepo Challenge: CI/CD

On the way to starting my own service, the next challenge I came across was implementing CI/CD for my project, after I had finished a first rough implementation of DDD.

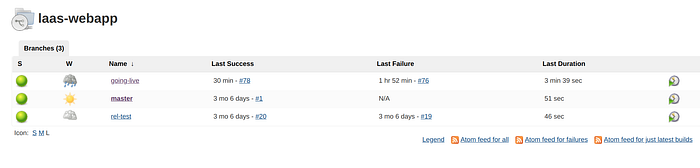

My first decision was to use Jenkins pipelines, because then my build is part of my code as well. Jenkins has a special job type called “Multibranch Pipeline”, which expects you to have a Jenkinsfile in your source code. If you have that, Jenkins only asks for the git URL of the project, creates one job for each of your branches, and monitors these branches for you. If you make a change on a branch, the Multibranch setup detects it and triggers a new build for that branch (of course there’s a lot that can be configured here, but the idea is the same).

Now what did I do in my Jenkinsfile? Almost nothing! I used to have a Jenkins library where I would pass in some parameters and the library was “smart enough” to know what to do. This broke down pretty fast for my application because of my first wrong assumption, which was “all maven builds are almost the same”.

For LoCoRepo, I had a multi-module project in which:

- There are a bunch of modules with their unit tests which are either libraries or runner applications (more like an assembly module).

- There is one module-test per micro-service, which tests that specific micro-service using Cucumber. Whatever “External Resources” the test requires are usually available through an embedded version of the resource or through docker containers, thanks to Testcontainers.

- There is one integration-test for the whole application. The idea is to do a full end-to-end test on the application. Again, the tests are implemented in Cucumber, but this time we need the whole application. Ideally, we should follow the same steps as the production deployment process somewhere, run our tests, and then throw away the deployed application.

The first type of module was easy. The module tests were a bit more challenging. I like the idea of running a build inside a docker container because:

- I have more control over the build environment. For example, I can say I want to run my build in a “maven:3” docker image, and then I don’t need to care about which Jenkins is running the build or whether it has maven installed. As long as it supports pipelines, my build can start!

But, as with anything good on earth, there’s a price to pay:

- The default user in the maven docker image is root. This and similar permission challenges pushed me in a direction where I now create my own docker image with the same UID as the current user (Jenkins) and with the GID set to the “docker” group id, to let my unit tests run docker containers.

- You would need to run docker inside docker.
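The UID/GID alignment described above can be sketched in shell. This is only an illustration under assumptions: the build argument names BUILD_UID and BUILD_GID are hypothetical, and the custom Dockerfile (not shown in this post) would have to declare them with ARG and create the user accordingly.

```shell
# Numeric id of the "docker" group, read from a group file (default: /etc/group).
docker_group_id() {
  awk -F: '$1 == "docker" { print $3 }' "${1:-/etc/group}"
}

# Build arguments that give the image the current user's UID and the docker
# group's GID. BUILD_UID and BUILD_GID are hypothetical argument names.
build_args() {
  printf '%s %s\n' \
    "--build-arg BUILD_UID=$(id -u)" \
    "--build-arg BUILD_GID=$(docker_group_id "${1:-/etc/group}")"
}
```

On the Jenkins host this could be used as “docker build $(build_args) -t my-build-image .”, where the image name is again just a placeholder.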

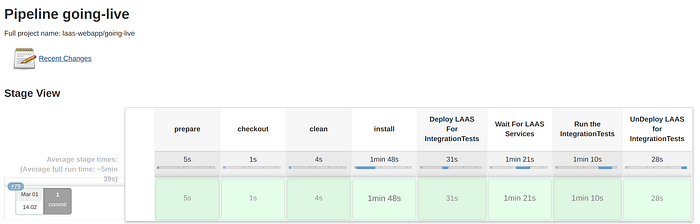

For the Integration Test module, I did a lot more! FYI, the deployment tool I use for my app is Helm. This is what it looks like in the end (as you can see, I have almost 80 failed attempts!):

The first four steps are not important; they basically do what we covered before. The rest is trying to achieve the end-to-end integration test. First of all, I had to integrate my Jenkins with the minikube I was running on localhost, and I had to give this Jenkins the full cluster admin role on kubernetes because it was supposed to deploy my application. Then, in the stage “Deploy LAAS For IntegrationTests”, Jenkins runs a pod on kubernetes which has the helm executable. Using the helm repository which is now in my source code, it deploys my application to kubernetes. The next stage, “Wait For LAAS Services”, runs a shell script on a pod inside kubernetes which just waits for all my services to be ready by checking the Spring Actuator health endpoint. After all services are ready, the stage “Run the IntegrationTests” starts a maven pod on kubernetes and runs “mvn test” on the project. After all is done, the stage “UnDeploy LAAS for IntegrationTests” runs another helm pod on kubernetes and deletes the application.
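The waiting step could look roughly like the sketch below. It is not the actual script from the pipeline: the function names are made up for illustration, the health URL (for example a path ending in /actuator/health) is an assumption that depends on the Spring Boot version and configuration, and the attempt count and delay are arbitrary defaults.

```shell
# Fetch the health document of one service; curl -f fails on HTTP errors,
# which includes the 503 that Actuator returns when the status is not UP.
probe_health() {
  curl -fsS --max-time 5 "$1"
}

# Poll one health URL until it reports UP or the attempts run out.
# Arguments: url, number of attempts (default 30), delay in seconds (default 5).
wait_for_service() {
  url="$1"
  attempts="${2:-30}"
  delay="${3:-5}"
  i=1
  while [ "$i" -le "$attempts" ]; do
    if probe_health "$url" 2>/dev/null | grep -q '"status":"UP"'; then
      echo "UP: $url"
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  echo "TIMEOUT: $url" >&2
  return 1
}
```

Calling wait_for_service once per service, and failing the stage as soon as one call returns non-zero, would give the “wait for all my services” behaviour.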

There are lots of challenges (some still unresolved), but I am almost there (at least I have the building blocks required by CI). The challenges I faced are:

Challenge 1: I have 3 different types of tests, and in each stage I want to run only one of them. As I mentioned, all my tests are JUnit tests, so I need special customization to skip some of the tests and run the rest.

Solution: I created 3 new parent modules, starting with one for “libraries and runner applications”. Let me emphasize one thing here: when you create a sub-module, by default the sub-module’s parent is set to the enclosing module, but that’s not a rule, and it’s actually better if you don’t do that! So I have my tree of projects, which shows the “child” relation, but for parents I have totally different POM modules. Using this setup, I set the parent POM of the “libraries and runner applications” to the “normal parent”. These modules have unit tests. The parent POM defines a profile called “skipUnitTests”, which is activated if you pass a “skipUnitTests” property (the idea is copied from “-DskipTests”). In this profile I set the skip property of maven-surefire-plugin to true.

    <profiles>
      <profile>
        <id>skipUnitTests</id>
        <activation>
          <property>
            <name>skipUnitTests</name>
          </property>
        </activation>
        <build>
          <plugins>
            <plugin>
              <groupId>org.apache.maven.plugins</groupId>
              <artifactId>maven-surefire-plugin</artifactId>
              <configuration>
                <skip>true</skip>
              </configuration>
            </plugin>
          </plugins>
        </build>
      </profile>
    </profiles>

As you probably guessed, I have another parent POM for the module-test modules with:

    <profiles>
      <profile>
        <id>skipModuleTests</id>
        <activation>
          <property>
            <name>skipModuleTests</name>
          </property>
        </activation>
        <build>
          <plugins>
            <plugin>
              <groupId>org.apache.maven.plugins</groupId>
              <artifactId>maven-surefire-plugin</artifactId>
              <configuration>
                <skip>true</skip>
              </configuration>
            </plugin>
          </plugins>
        </build>
      </profile>
    </profiles>

And one for the integration test module with:

    <profiles>
      <profile>
        <id>skipIntegrationTests</id>
        <activation>
          <property>
            <name>skipIntegrationTests</name>
          </property>
        </activation>
        <build>
          <plugins>
            <plugin>
              <groupId>org.apache.maven.plugins</groupId>
              <artifactId>maven-surefire-plugin</artifactId>
              <configuration>
                <skip>true</skip>
              </configuration>
            </plugin>
          </plugins>
        </build>
      </profile>
    </profiles>

Now, if I run:

- “mvn test -DskipTests” => it will skip all tests

- “mvn test -DskipUnitTests” => it will skip only unit tests

- “mvn test -DskipModuleTests” => it will skip only module tests

- “mvn test -DskipIntegrationTests” => it will skip only integration tests
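Since each flag skips one type, running only one type in a pipeline stage means passing the other two flags together. A small sketch of that mapping follows; the stage names unit, module and integration are labels chosen here for illustration, not names taken from the real pipeline.

```shell
# Print the skip flags that leave exactly one test type enabled.
stage_flags() {
  case "$1" in
    unit)        echo "-DskipModuleTests -DskipIntegrationTests" ;;
    module)      echo "-DskipUnitTests -DskipIntegrationTests" ;;
    integration) echo "-DskipUnitTests -DskipModuleTests" ;;
    *)           echo "unknown stage: $1" >&2; return 1 ;;
  esac
}
```

A stage that should run only the module tests would then call “mvn test $(stage_flags module)”.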

Challenge 2: There is a problem with my current setup. If the integration tests fail, my application is not undeployed and has to be deleted manually. I googled around for this problem but couldn’t find an easy way to mark a stage as “always run” or to use a kubernetes agent in the “post” part of the pipeline. I will probably aggregate all the stages related to the integration test into one giant stage with a try-finally block, so that the application is always undeployed.
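The try-finally idea can be illustrated outside of the Jenkinsfile with a plain shell wrapper. This is a stand-in for the Groovy try-finally block, not the pipeline code itself, and the three commands passed in are whatever deploys, tests and undeploys the application in a given setup.

```shell
# Run deploy and tests, then always run undeploy, and return the status of
# the first step that failed (0 if deploy and tests both succeeded).
run_with_cleanup() {
  deploy_cmd="$1"
  test_cmd="$2"
  undeploy_cmd="$3"
  rc=0
  sh -c "$deploy_cmd" && sh -c "$test_cmd" || rc=$?
  # The "finally" part: executed whether or not the steps above failed.
  sh -c "$undeploy_cmd" || echo "undeploy failed" >&2
  return "$rc"
}
```

The important property is that a failing test run still reaches the undeploy command while the overall result stays red.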

Challenge 3: How can I parallelize this to improve performance? The biggest challenge I think I might have is not being able to run tests in parallel. The good news is that if the problem is limited to one type of test (e.g. module tests), I can still run the other types in parallel, thanks to the separation of modules by test type.
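One possible shape for running independent test types side by side is sketched below with shell background jobs. It is only a sketch: whether two Maven invocations can safely share a workspace and local repository is an open question that this setup has not confirmed, and the helper name is made up.

```shell
# Start every command in the background, wait for all of them,
# and fail if any one of them failed.
run_parallel() {
  pids=""
  for cmd in "$@"; do
    sh -c "$cmd" &
    pids="$pids $!"
  done
  failed=0
  for pid in $pids; do
    wait "$pid" || failed=1
  done
  return "$failed"
}
```

Each argument would be one complete command line, for example one “mvn test” call per test type with the matching skip flags.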